DevOps… CI/CD… Docker… Kubernetes… I’m sure you’ve been bombarded with these words a lot over the past year. It seems like the entire world is talking about them. At the rate this space is progressing, it won’t be long before we reach the stage of NoOps.

Don’t worry. It’s okay to feel lost in the giant sea of tools and practices. It’s about time we break down what DevOps really is.

The objective of this article is to set a solid foundation for you to build on top of. So let’s start with the obvious question.

What is DevOps?

DevOps is the simplification or automation of established IT processes.

You can probably see where I’m going with this.

I’ve seen so many people start this journey to adopt DevOps only to find themselves lost. There seems to be a pattern to this.

It usually starts with a video on how a fancy tech startup has automated its release cycle. Deployments happen automatically once all the tests pass. Rollbacks, in case of failure, are automatic. Thousands of simultaneous A/B tests are driving up customer engagement.

Let’s be honest. We all want to achieve DevOps “Moksha”.

We are all tired of releasing a new version like it’s a rollercoaster ride.

Unfortunately, DevOps doesn’t work that way. DevOps isn’t a magic wand which can solve all your problems in an instant.

Instead, it is a systematic process of choosing the right tools and technology to get the job done.

All of this starts with a process.

It doesn’t matter what the process is. It could be simplifying the deployment of your app or automating your tests. Whatever your process is, the act of making your life easier is what DevOps is all about.

But you always need to start with a process.

In fact, if your process cannot be done manually (on a smaller scale), you should probably re-examine your process.

I mean it.

Enough talking. Let’s take a real-world example to understand things better.

Let’s take a Real DevOps Example

Let’s take a simple example of making a Node.js app live on a VM in the cloud.

The Process

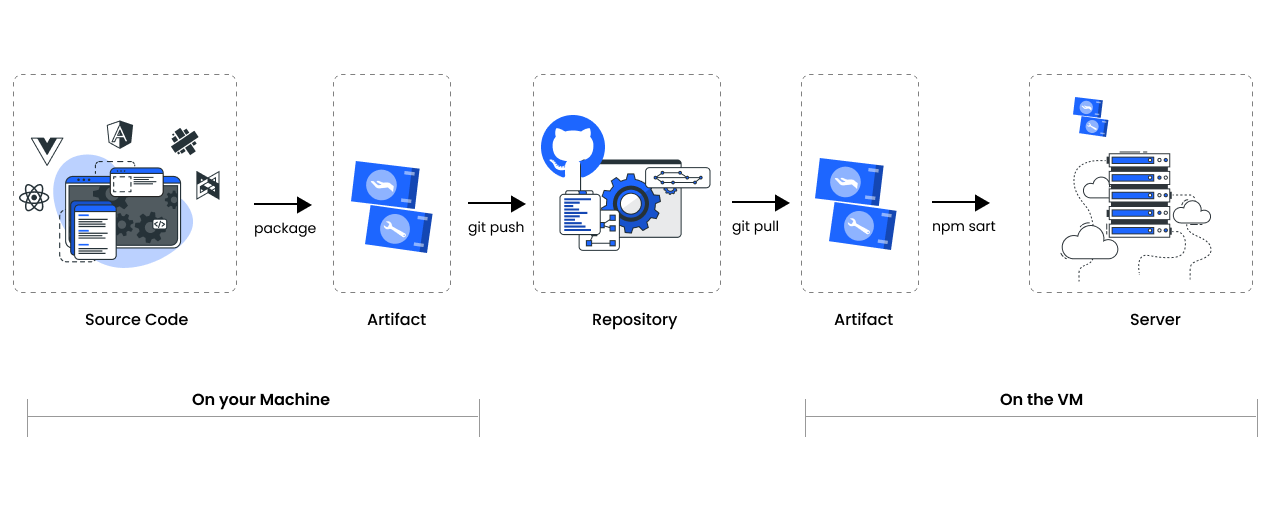

Here’s what our process looks like:

- Start with the source code: This is our source of truth. We can run our process from anywhere as long as we have access to the source code.

- Build an Artifact: We then package our source code to build an artifact. For a compiled language, the compiled output (a JAR file in the case of Java) would be our artifact. In our case, the source code itself is the artifact to be released.

- Publish to an Artifact Repository: Next, we push our artifact to a repository. This is a location from which our target environment can pull the artifact. We could stick with something like GitHub since we are working with source code here.

- Pull and run your app: Finally, we pull the artifact onto our VM and start a Node.js process by running `npm start`.

It’s okay if you do things a slightly different way. We are here to focus on the journey and not the destination.

Our first DevOps Project

Let’s not do anything fancy here.

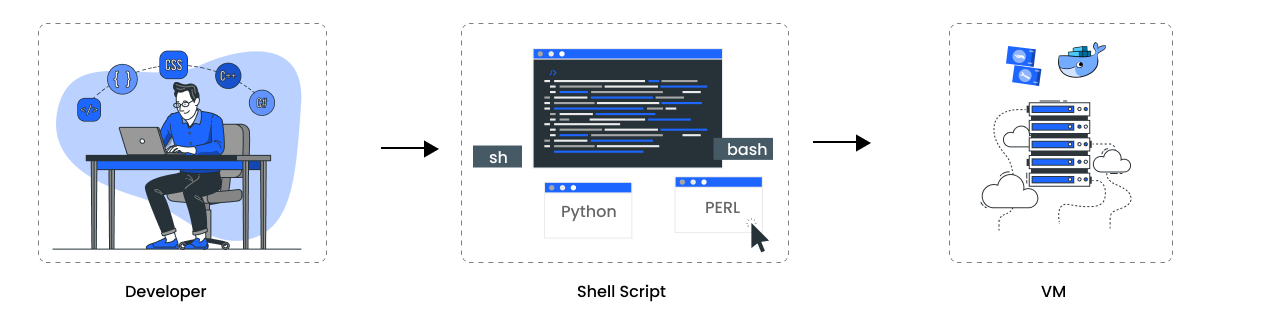

The easiest way to automate this process would be to write a simple shell script to run all the commands in sequence.
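
Here is a minimal sketch of such a script. I've written it in Python rather than shell so that it can share one language with the other sketches in this post. The repository URL, the directory name and the `npm install` step are assumptions for illustration; swap in the commands you already run by hand.

```python
import shlex
import subprocess

# Hypothetical values for illustration; replace with your own repository and paths.
REPO_URL = "https://github.com/example/my-node-app.git"
APP_DIR = "my-node-app"

# The manual process, written down as an ordered list of (command, working directory).
STEPS = [
    (["git", "clone", REPO_URL, APP_DIR], None),
    (["npm", "install"], APP_DIR),
    (["npm", "start"], APP_DIR),
]


def run_steps(steps, dry_run=True):
    """Run each command in order and stop at the first failure.

    With dry_run=True the commands are only printed, which is a cheap way
    to review the script before letting it touch a real machine.
    """
    executed = []
    for command, cwd in steps:
        line = shlex.join(command)
        print(f"[{cwd or '.'}] {line}")
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code,
            # so a failed step never silently leads into the next one.
            subprocess.run(command, cwd=cwd, check=True)
        executed.append(line)
    return executed


if __name__ == "__main__":
    run_steps(STEPS, dry_run=True)
```

Note that `npm start` keeps running for as long as the app does, so a real run with `dry_run=False` will not return until the app stops.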

Congratulations!!! That’s our first DevOps project!!! 🥳🎉

I know shell scripts sound too simple to be taken seriously. I suspect you already have such scripts in place. But believe me, that’s DevOps!

Don’t worry, we will get to the fancy stuff in a minute. But it’s important to understand that this is how DevOps works.

Importance of Repeatability

Let me ask you one question. Which one of these would you prefer?

- An automated deployment pipeline that works 60% of the time, or

- A boring shell script that gets the job done every time it’s executed

If you have dealt with production failures in the middle of the night, you’ll choose the shell script.

The reason is simple.

Reliability is far more critical than the degree of automation.

In other words,

A DevOps process must be able to produce consistent results every time it’s run.

Making our process repeatable

Let’s take the example of our shell script.

Currently, our shell script depends on Node.js being installed on the VM we want to deploy the app to.

What would happen if the Node.js runtime was missing? These days, an incorrect version of the runtime is enough to break our application.
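
To make that failure mode concrete, here is a small sketch of a pre-flight check a deployment script could run before starting the app. The required major version (18) is an assumption for illustration, as are the function names; the only thing taken from Node.js itself is that `node --version` prints a string such as `v18.17.1`.

```python
import re
import subprocess

REQUIRED_MAJOR = 18  # assumed requirement, for illustration only


def parse_node_version(output):
    """Turn a string such as 'v18.17.1' into the tuple (18, 17, 1)."""
    match = re.match(r"v(\d+)\.(\d+)\.(\d+)", output.strip())
    if match is None:
        raise ValueError(f"unrecognised version string: {output!r}")
    return tuple(int(part) for part in match.groups())


def runtime_problem(version_output, required_major=REQUIRED_MAJOR):
    """Return a description of the problem, or None if the runtime is acceptable."""
    if version_output is None:
        return "Node.js is not installed"
    major = parse_node_version(version_output)[0]
    if major != required_major:
        return f"Node.js {major}.x found, but {required_major}.x is required"
    return None


def installed_node_version():
    """Ask the local machine for its Node.js version; None if node is missing."""
    try:
        result = subprocess.run(
            ["node", "--version"], capture_output=True, text=True, check=True
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return result.stdout


if __name__ == "__main__":
    print(runtime_problem(installed_node_version()) or "runtime OK")
```

A check like this only detects the problem. It does nothing to fix it, which is why we need something better.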

This problem only gets worse in polyglot environments where we deal with multiple programming languages.

A simple solution would be to archive the Node.js runtime along with our source code in a zip file. The zip file can then be sent to the VM. This way, the VM can use the Node.js runtime bundled in the archive to run our app.
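
A rough sketch of that bundling step could look like this. The `app/` and `runtime/` prefixes and the `bundle` function are my own naming, and the demo uses throwaway placeholder files rather than a real Node.js download.

```python
import tempfile
import zipfile
from pathlib import Path


def bundle(source_dir, runtime_dir, archive_path):
    """Write source_dir under app/ and runtime_dir under runtime/ in one zip."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for prefix, root in (("app", Path(source_dir)), ("runtime", Path(runtime_dir))):
            for path in sorted(root.rglob("*")):
                if path.is_file():
                    # Store paths relative to their root so the archive
                    # unpacks the same way on any machine.
                    archive.write(path, Path(prefix, path.relative_to(root)).as_posix())
    return archive_path


if __name__ == "__main__":
    # Demo with throwaway directories standing in for the real source tree
    # and a real Node.js runtime download.
    with tempfile.TemporaryDirectory() as tmp:
        tmp = Path(tmp)
        (tmp / "src").mkdir()
        (tmp / "src" / "index.js").write_text("console.log('hello');\n")
        (tmp / "node" / "bin").mkdir(parents=True)
        (tmp / "node" / "bin" / "node").write_text("placeholder for the node binary\n")
        archive_file = bundle(tmp / "src", tmp / "node", tmp / "release.zip")
        with zipfile.ZipFile(archive_file) as archive:
            print(archive.namelist())
```

One caveat if you try this for real: the runtime binary has to stay executable after unpacking, so unpack it with a tool that restores file permissions. Python's own `ZipFile.extractall` does not.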

Luckily, there is a tool to make our lives easier.

In comes Docker and Containers

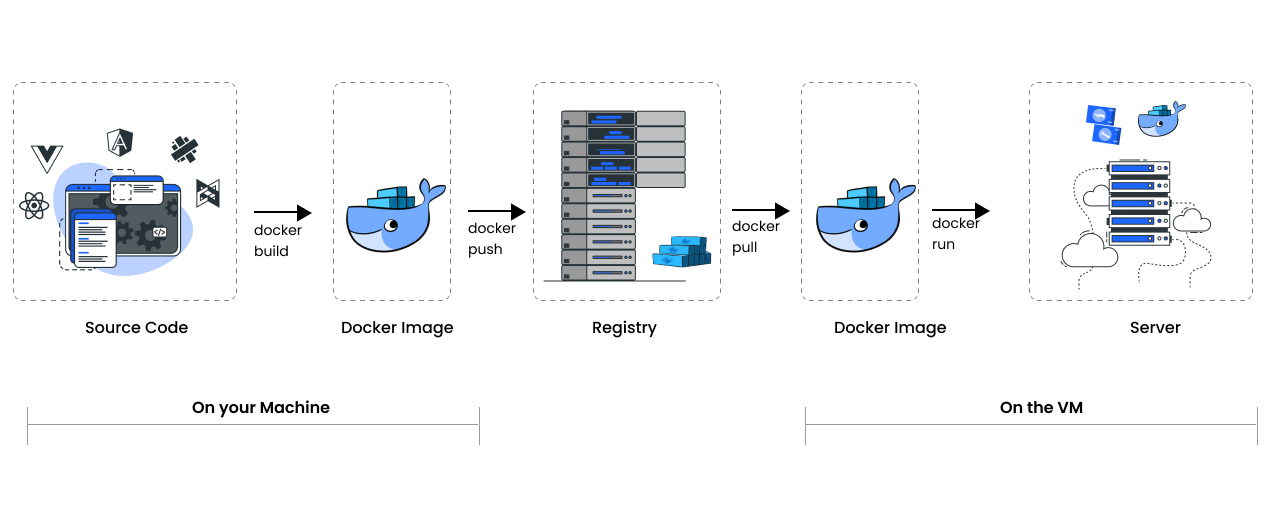

If you are new to this, think of Docker as a way to package your artifact along with all its OS dependencies, including Node.js, into a container image.

Using containers, we can deploy any application on a VM which has Docker installed.
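
As a sketch, the container version of our process boils down to one Dockerfile and four commands. The image name, the port and the `node:18` base image are assumptions for illustration; the Python wrapper only assembles and prints the commands so you can see which ones run where.

```python
IMAGE = "registry.example.com/my-node-app:1.0.0"  # hypothetical image name

# A plausible Dockerfile for a Node.js app started with `npm start`.
DOCKERFILE = """\
FROM node:18
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
CMD ["npm", "start"]
"""


def build_machine_commands(image):
    """Commands run where the source code lives: build the image, then publish it."""
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
    ]


def vm_commands(image, port=3000):
    """Commands run on the target VM, which needs Docker but not Node.js."""
    return [
        ["docker", "pull", image],
        ["docker", "run", "-d", "-p", f"{port}:{port}", image],
    ]


if __name__ == "__main__":
    print(DOCKERFILE)
    for command in build_machine_commands(IMAGE) + vm_commands(IMAGE):
        print(" ".join(command))
```

The Node.js version now lives in the image (the `FROM` line) instead of on the VM, which is exactly the repeatability we were after.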

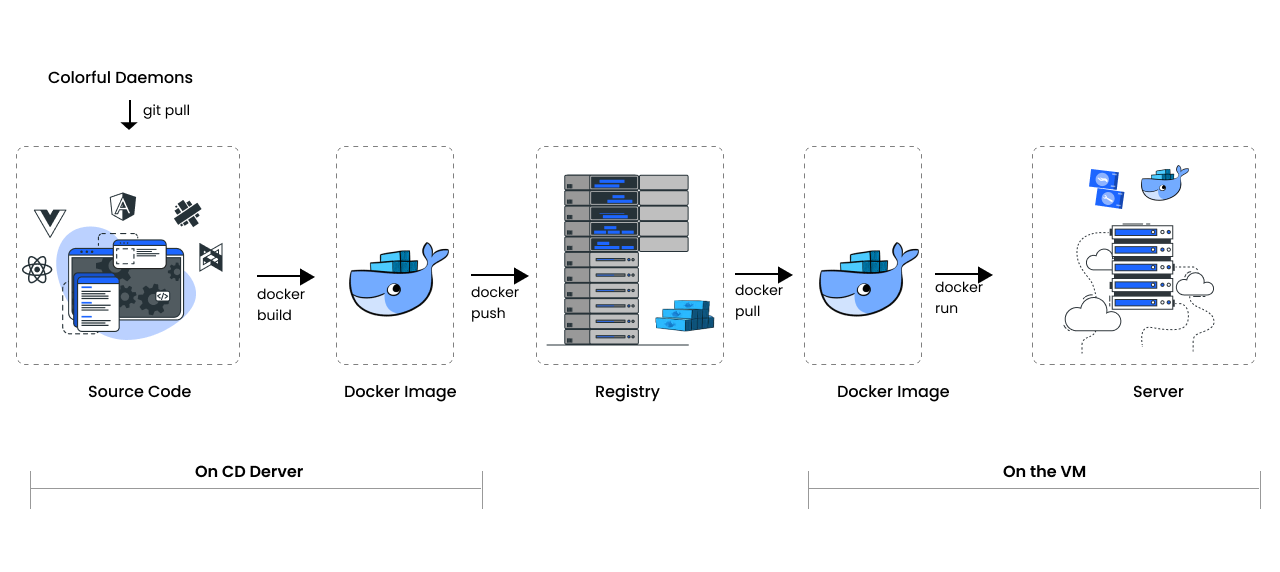

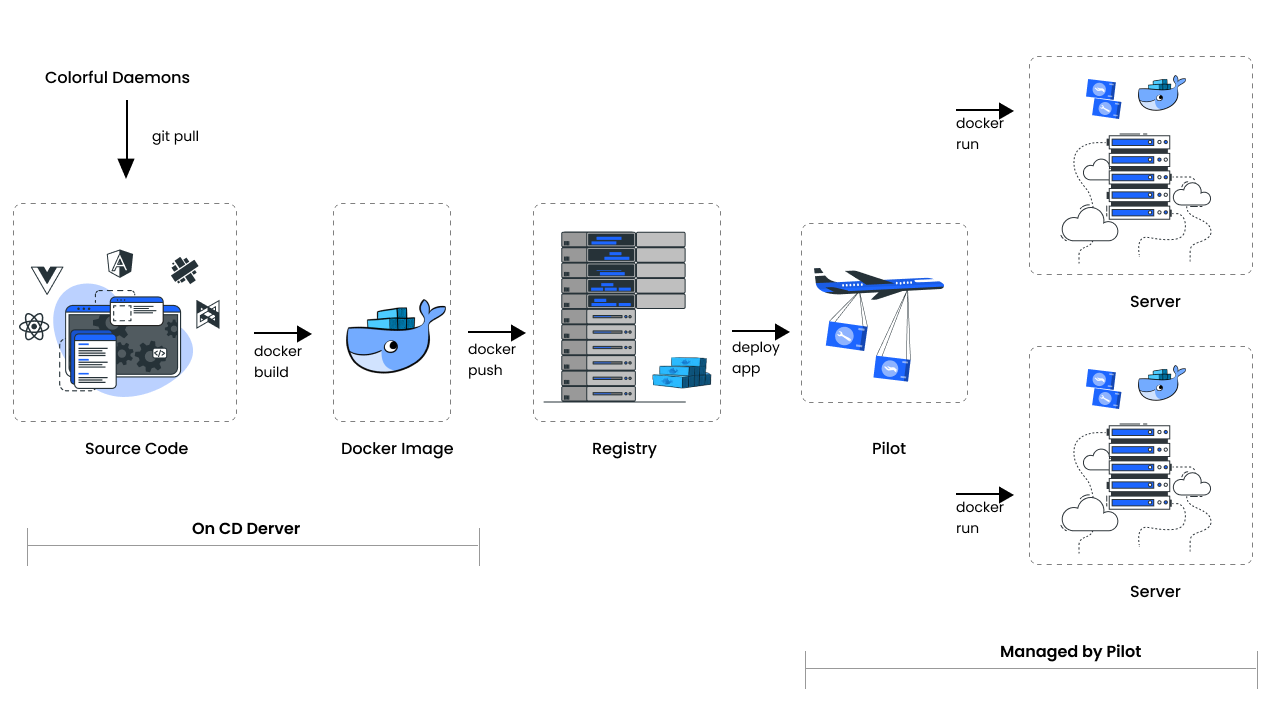

With Docker, our flow will look something like this:

There is a lot more to containers than just this. However, this was one of the reasons why containers got so popular.

Docker Vs Containers

Let me clarify this. Docker and containers are not the same thing anymore.

Docker is a set of tools to build and ship container images. Container runtimes like containerd then use those images to create and run containers.

Many are concerned about the future of Docker given the recent events which have taken place.

It is important to understand that Docker is not going anywhere anytime soon. In my view it provides the best developer experience (DX), and it will continue to play a major role in building and shipping container images.

Getting Serious with DevOps

We have made some serious progress already. Hopefully, we understand how Docker fits into the DevOps process.

It’s time to take things to the next level.

Triggering deployment based on events

Our script looks pretty solid but it’s still triggered manually.

Wouldn’t it be great if we could trigger this script automatically whenever someone pushes code to GitHub? In other words, we want to trigger this script on an event.

GitHub can invoke webhooks on a certain set of events.

To achieve this, we need to make a simple HTTP server that executes our shell script whenever its endpoint is hit. We can then configure GitHub to call our endpoint on the push event.
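
A bare-bones sketch of such a server, using only Python's standard library, might look like this. The secret, the script path and the port are placeholders I made up. The `X-GitHub-Event` and `X-Hub-Signature-256` headers are what GitHub sends with each delivery, and checking the signature is what stops a stranger from triggering your deployments.

```python
import hashlib
import hmac
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer

WEBHOOK_SECRET = b"change-me"     # assumed: the secret configured in GitHub
DEPLOY_COMMAND = ["./deploy.sh"]  # assumed: path to our deployment script


def signature_is_valid(secret, body, header_value):
    """Check GitHub's X-Hub-Signature-256 header against the request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected.encode(), (header_value or "").encode())


def should_deploy(event, secret, body, signature):
    """Deploy only for correctly signed push events."""
    return event == "push" and signature_is_valid(secret, body, signature)


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        event = self.headers.get("X-GitHub-Event")
        signature = self.headers.get("X-Hub-Signature-256")
        if should_deploy(event, WEBHOOK_SECRET, body, signature):
            # Popen returns immediately, so GitHub gets its response
            # without waiting for the deployment to finish.
            subprocess.Popen(DEPLOY_COMMAND)
            self.send_response(202)
        else:
            self.send_response(400)
        self.end_headers()


def serve(port=8080):
    """Listen for webhook deliveries until the process is stopped."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Calling `serve()` starts the server. Anything that is not a signed push event, including the `ping` GitHub sends when you first register the webhook, gets a 400 and triggers nothing.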

Let’s call this server Colorful Daemons or CD.

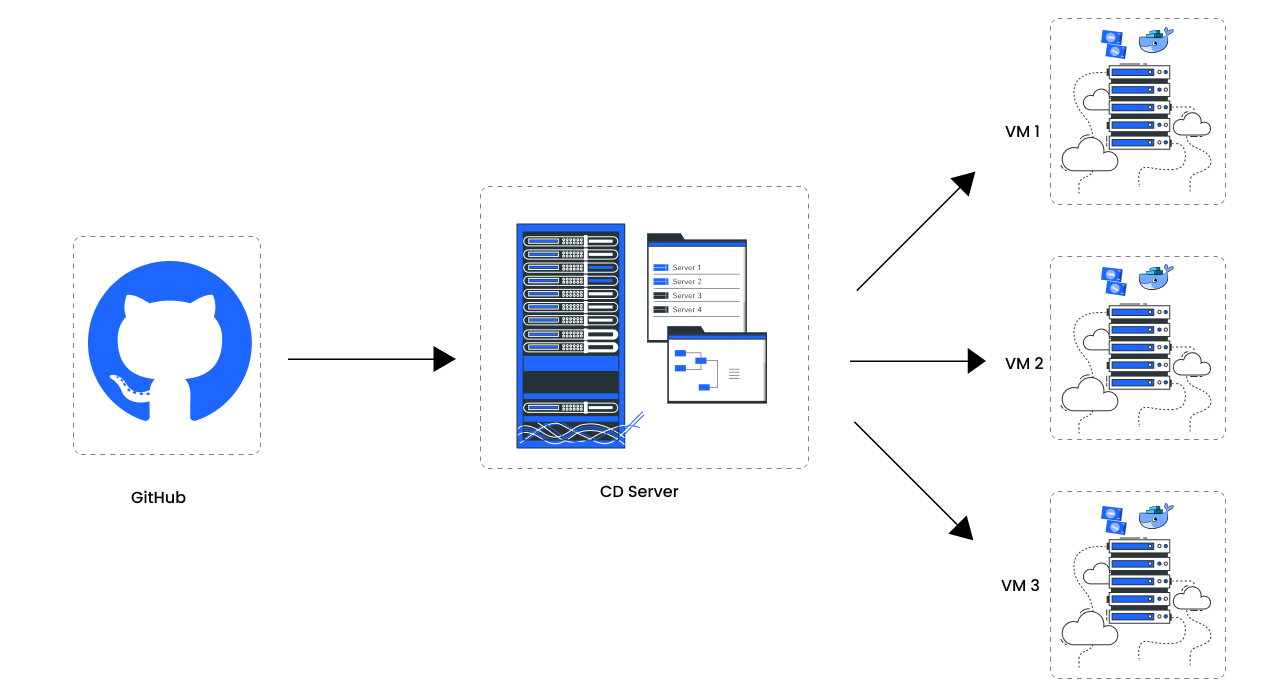

Our new flow will look something like this:

Congratulations! You just set up what we call a CD pipeline.

And no… I don’t mean Colorful Daemons. I’m talking about Continuous Deployment.

A continuous deployment pipeline is a piece of software responsible for taking your app from something like GitHub all the way to your target environment, where it finally gets deployed.

This is basically the CI/CD stuff you keep hearing about. When people talk about tools like Jenkins and CircleCI, they are usually referring to CI/CD.

What we just made with Colorful Daemons was a continuous deployment pipeline. Don’t confuse it with continuous integration or continuous delivery. We’ll get to those some other day.

The DevOps pattern

I guess you’ve already found a pattern here. We start with a process, find a section we aren’t happy with and then introduce some software component to simplify or automate it.

That’s getting the dev in ops. And that’s all there is to it.

This is the real answer to the question, “What is DevOps?”

Introducing Container Orchestration

Let’s finish up by making one small improvement.

Till now, we have been dealing with deploying our app to a single VM or a single node. What if we wanted to deploy our app to multiple nodes?

The easiest way to achieve this would be to modify our CD server to SSH into all the VMs and deploy our container to each one of them.
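
In sketch form, that approach is just a loop over a list of hosts. The addresses, the `deploy` user and the image name below are made up for illustration, and the code only builds the `ssh` commands rather than running them.

```python
import shlex

# Hypothetical inventory; in this approach it is hardcoded in the script.
HOSTS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
IMAGE = "registry.example.com/my-node-app:1.0.0"


def remote_deploy_command(host, image, user="deploy"):
    """Build the ssh invocation that pulls and starts the container on one VM."""
    quoted = shlex.quote(image)
    remote = f"docker pull {quoted} && docker run -d {quoted}"
    return ["ssh", f"{user}@{host}", remote]


def deploy_everywhere(hosts, image):
    """One ssh command per VM, in the order the hosts are listed."""
    return [remote_deploy_command(host, image) for host in hosts]


if __name__ == "__main__":
    for command in deploy_everywhere(HOSTS, IMAGE):
        print(shlex.join(command))
```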

While this method works, we’ll need to change our script every time our infrastructure changes. In a world where applications are always autoscaling and VMs are considered disposable, this is unacceptable.

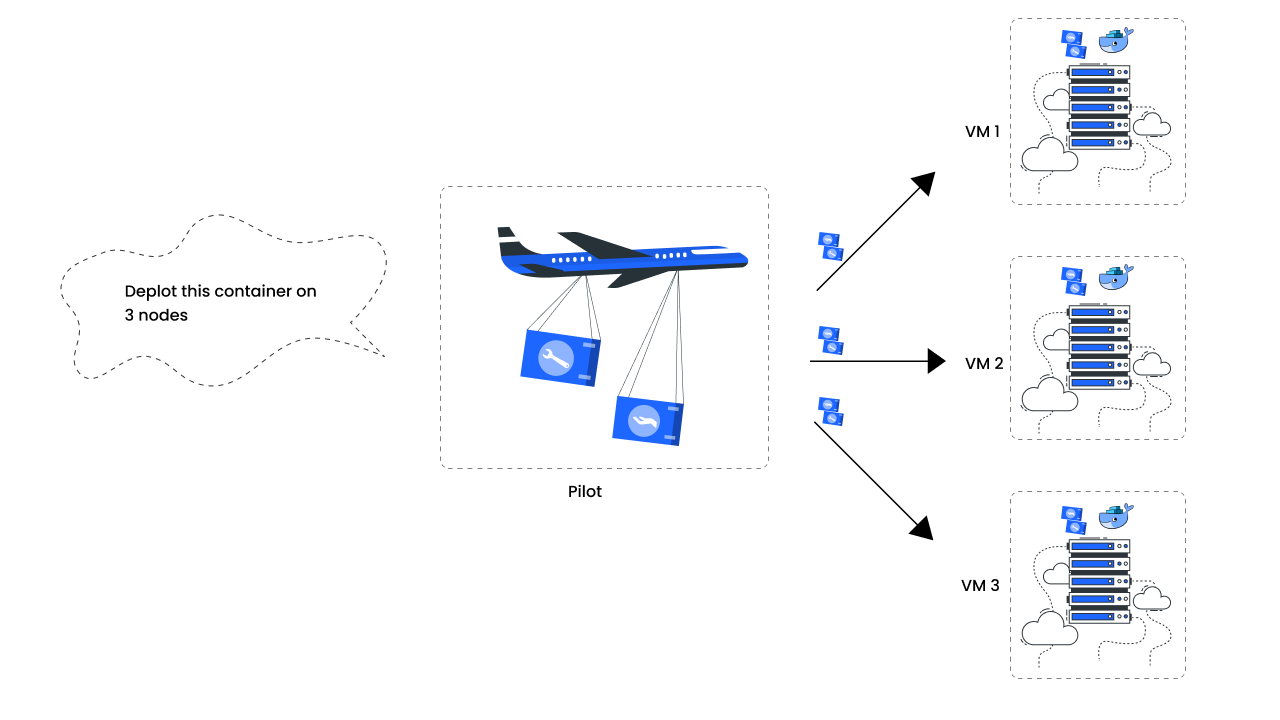

A better way would be to make another HTTP server to track infrastructure changes. We can call this server “Pilot”.

This server will be responsible for performing health checks on the various VMs in our cluster to maintain a list of active VMs. It could even communicate with the cloud vendor to make things more robust.

Pilot will expose an endpoint as well, to accept the details of the container to spawn. It can then talk to the various VMs to get the job done.

Now, our CD server can simply send a request to Pilot instead of talking to each VM individually.
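
To show the idea, here is a toy, in-memory sketch of Pilot's core logic. It leaves out the HTTP endpoint and the actual calls to the VMs. The class and method names, the 30-second timeout and the "fewest containers first" placement rule are all my own assumptions, not how any real orchestrator is implemented.

```python
import time


class Pilot:
    """Toy orchestrator: tracks which VMs are alive and places containers on them."""

    def __init__(self, heartbeat_timeout=30.0):
        self.heartbeat_timeout = heartbeat_timeout
        self.last_seen = {}   # VM address -> time of last successful health check
        self.placements = {}  # VM address -> list of images placed there

    def record_health_check(self, vm, now=None):
        """Mark a VM as healthy; new VMs join the cluster this way."""
        self.last_seen[vm] = time.monotonic() if now is None else now
        self.placements.setdefault(vm, [])

    def active_vms(self, now=None):
        """VMs whose last health check is recent enough to be trusted."""
        now = time.monotonic() if now is None else now
        return sorted(
            vm for vm, seen in self.last_seen.items()
            if now - seen <= self.heartbeat_timeout
        )

    def deploy(self, image, replicas=1, now=None):
        """Place each replica on the active VM that currently has the fewest containers."""
        chosen = []
        for _ in range(replicas):
            candidates = self.active_vms(now)
            if not candidates:
                raise RuntimeError("no active VMs available")
            vm = min(candidates, key=lambda v: len(self.placements[v]))
            self.placements[vm].append(image)
            chosen.append(vm)
        return chosen


if __name__ == "__main__":
    pilot = Pilot()
    pilot.record_health_check("vm-a", now=0)
    pilot.record_health_check("vm-b", now=0)
    print(pilot.deploy("my-node-app:1.0.0", replicas=2, now=10))
```

The CD server no longer needs a list of hosts. It tells Pilot what to run and how many copies, and Pilot decides where.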

Our new flow will look something like this:

The second server, Pilot, is called a container orchestrator. That’s what Kubernetes is!

You just designed a mini version of Kubernetes!

Also, Kubernetes is Greek for pilot. Isn’t that a pleasant coincidence?

Where to Start?

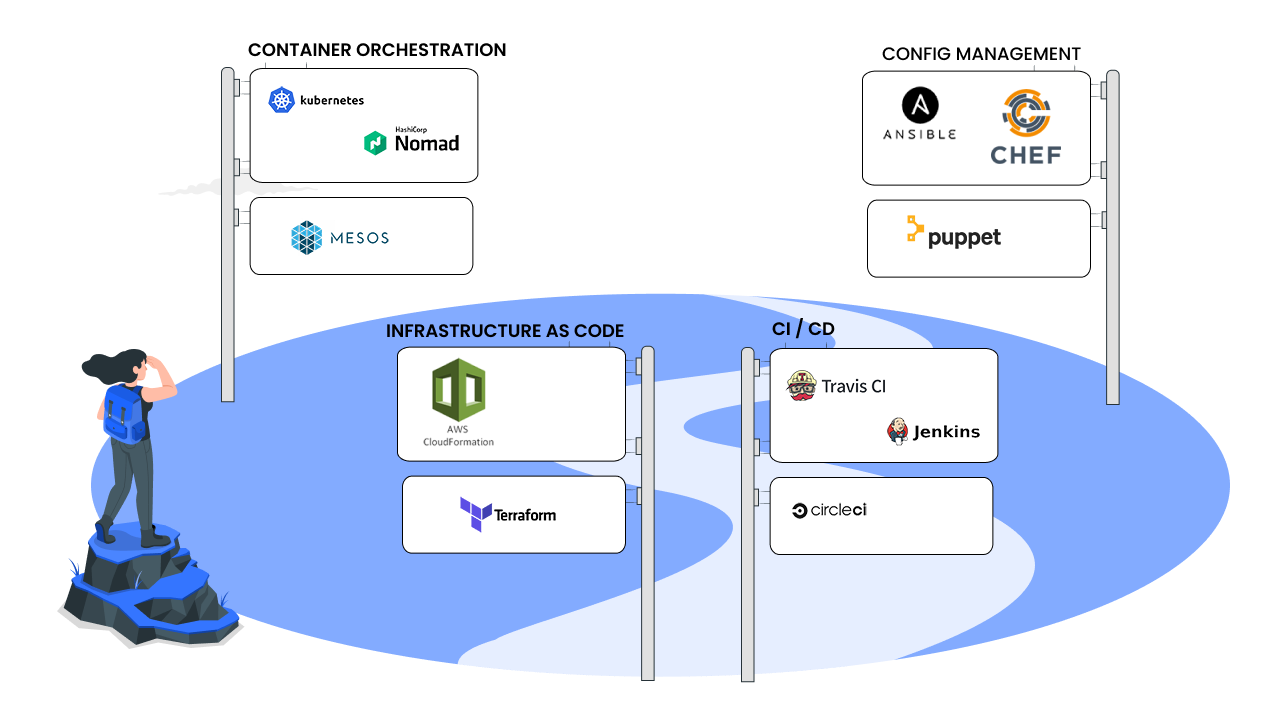

We covered quite a few tools together. This brings me to my last point. Ever wondered why the DevOps space is so fragmented?

If you think about it, there are so many tools out there that it’s hard to decide which is the right choice, or where you should even start.

Every organisation has its own way, its own process for doing things. And since their paths are different, the tools they need are different.

Your job is not to find which tool is the best. Your job is to find which process works best for you. Once you have that figured out, the tools are just a Google search away.

So now you know where to start. It’s not with the tools out there.

Start by understanding how your company and teams do things.

I’m literally asking you to open up a Word document, and copy-paste the commands you need to run to do stuff.

Wrapping up

I hope this post has been helpful in understanding how the DevOps field is arranged and how different tools depend on and coexist with each other.

I’d like to add:

Your DevOps process is only as strong as its foundation.

So work on the underlying process. It’s okay if you need to tweak your current process a bit.

An excellent foundation to build upon could be a tool like Space Cloud. Space Cloud is a Kubernetes-based serverless platform that helps you develop, deploy and secure cloud-native applications.

In a nutshell, Space Cloud gives you an excellent starting point to build your DevOps practices on. It makes rolling upgrades, canary deployments and autoscaling your applications easy. You can configure everything using the space-cli or REST APIs.

Enough of me bragging. Check out what Space Cloud is capable of for yourself!

You can also support us in our mission by giving us a star on GitHub.

Did this article help you? How do you make sure your apps are cloud-native? Share your experiences below.